Pretrial Risk Assessment Explained

Do pretrial risk assessment tools replace a judge's discretion?

No. Judges and court commissioners emphasize that pretrial risk assessment tools are just one piece of information they take into account when making bail decisions.

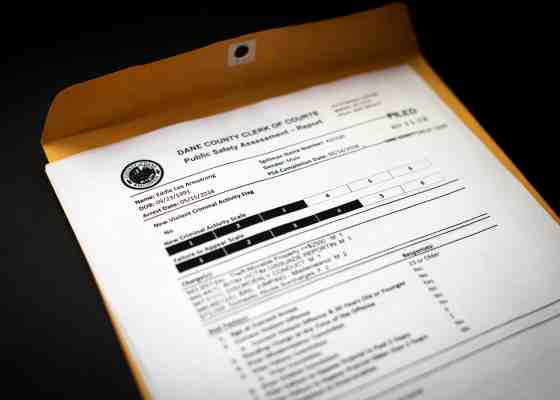

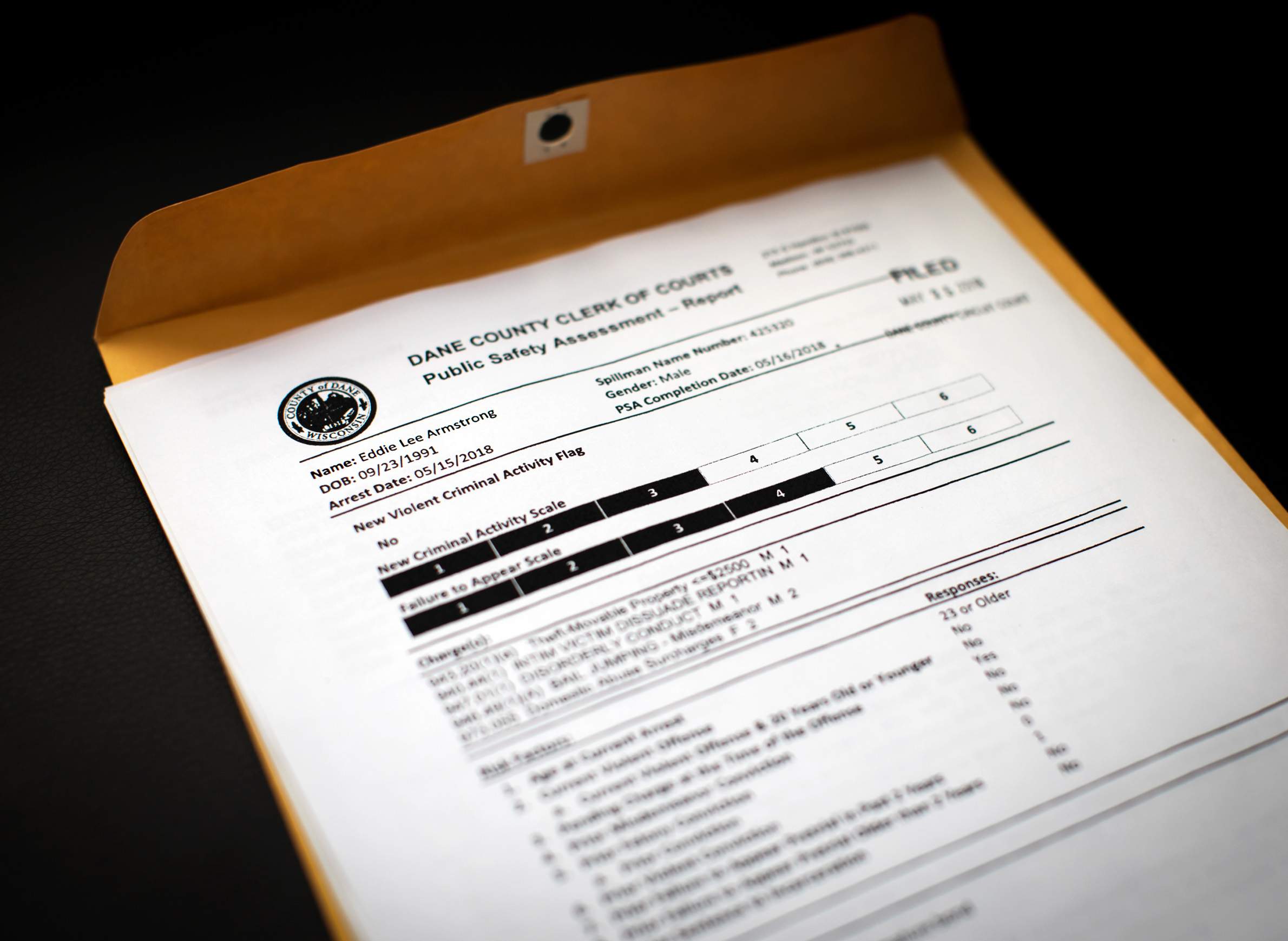

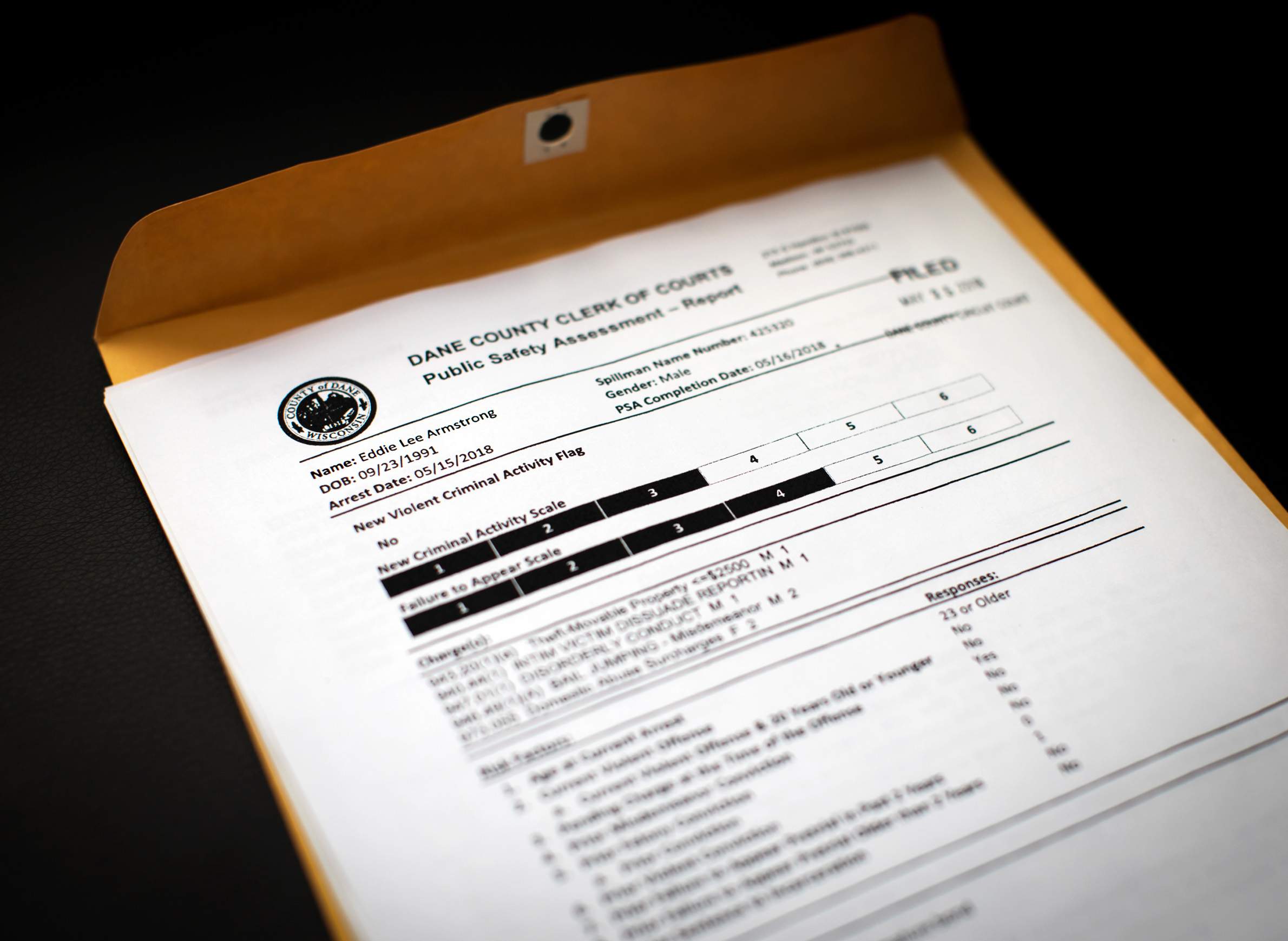

During bail hearings in Dane and Milwaukee counties, which both use a risk assessment tool called the Public Safety Assessment, or PSA, court commissioners also hear bail arguments from defense attorneys and prosecutors.

Are risk assessments used only for bail decisions? How many risk assessment tools are there?

There are many different risk assessment tools, used at various points in the criminal justice system, including bail hearings, sentencing, probation determinations and parole decisions. As of 2017, as many as 60 risk assessment tools were in use across the United States, according to a report by the Center for Court Innovation, a nonprofit that conducts research and assists with criminal justice reform efforts around the world.

The tools can be used to connect offenders to the right rehabilitation programs, determine appropriate levels of supervision like electronic monitoring, reduce the sentences of offenders who pose low risks of recidivism and divert low-risk offenders from jail or prison.

Some risk assessment tools are better designed than others, so one tool working poorly does not reflect on all of them. Each tool should be validated, meaning it needs to be tested for accuracy on the population of the jurisdiction where it will be used.

Do we know how risk assessment tools work?

Sometimes. One risk assessment tool called the Correctional Offender Management Profiling for Alternative Sanctions, or COMPAS, faced controversy in 2016 because of its proprietary nature.

In a case that went to the Wisconsin Supreme Court, the tool's creator, then called Northpointe, Inc., would not reveal how the COMPAS algorithm determined its risk scores or how it weighted certain factors, citing trade secrets. A Wisconsin man who was sentenced to six years — in part because of a COMPAS score — argued that the secretive nature of the tool violated his due process rights. The court upheld the use of the tool but outlined precautions for judges to consider.

Other tools, including the PSA, are more transparent. The risk factors the PSA uses and how they are weighted when calculating risk are publicly available. Another tool, the Virginia Pretrial Risk Assessment Instrument, or VPRAI, is also transparent about its methods.

Still, even Arnold Ventures, which developed the PSA, does not reveal the data it uses to create the algorithm, a dataset of about 750,000 cases from across the United States, said Logan Koepke, senior policy analyst at Upturn, a nonprofit that researches and advocates for technologies that promote equity in the criminal justice system. Koepke would like a "full line of sight" into the datasets used to create any pretrial risk assessment tool.

Didn't ProPublica prove that one pretrial risk assessment was biased against black defendants?

It's complicated.

In 2016, ProPublica found that COMPAS was "particularly likely to falsely flag black defendants as [potential] future criminals, wrongly labeling them this way at almost twice the rate as white defendants."

Because black defendants were disproportionately affected by these "false positives," ProPublica concluded the algorithm was "biased against blacks."

Researchers from California State University, the Administrative Office of the U.S. Courts and the Crime and Justice Institute challenged ProPublica's analysis. When they re-analyzed ProPublica's data, they found no evidence of racial bias.

COMPAS predicted risk correctly at essentially the same rates for both black and white defendants, they found. For example, for defendants who were rated high risk, 73 percent of white defendants reoffended and 75 percent of black defendants reoffended.

But when one group, in this case black defendants, has a higher base rate of recidivism, that group is mathematically guaranteed to have more false positives, said Sharad Goel, an assistant professor of management science and engineering at Stanford University.

That does not mean the algorithm itself is biased; it reflects "real statistical patterns" driven by "social inequalities" in the justice system, Goel said.

"If black defendants have a higher overall recidivism rate, then a greater share of black defendants will be classified as high risk … [and] a greater share of black defendants who do not reoffend will also be classified as high risk," Goel said in a 2016 column he co-wrote in the Washington Post.

One way to reduce the differences would be to systematically score white defendants as riskier than they actually are, but then the algorithm would be treating defendants differently based on race, he said.
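The arithmetic behind Goel's point can be sketched with a short calculation. The numbers below are hypothetical and chosen only for illustration; they are not ProPublica's data or COMPAS results. The sketch assumes a simplified tool with just two labels, high risk and low risk, that is equally accurate for both groups: 75 percent of defendants labeled high risk reoffend, and 25 percent of those labeled low risk reoffend. The function name and parameters are invented for this example.

```python
def false_positive_rate(base_rate, high_risk_reoffense, low_risk_reoffense):
    """Share of non-reoffenders who were nonetheless labeled high risk.

    Assumes a hypothetical two-label tool whose labels mean the same thing
    for every group: `high_risk_reoffense` of high-risk defendants reoffend
    and `low_risk_reoffense` of low-risk defendants reoffend.
    """
    # The group's overall reoffense rate fixes the share labeled high risk:
    # base_rate = high * high_risk_reoffense + (1 - high) * low_risk_reoffense
    high = (base_rate - low_risk_reoffense) / (
        high_risk_reoffense - low_risk_reoffense
    )
    # False positives: labeled high risk but did not reoffend.
    false_positives = high * (1 - high_risk_reoffense)
    non_reoffenders = 1 - base_rate
    return false_positives / non_reoffenders


# Hypothetical inputs, identical for both groups except the base rate.
HIGH_RISK_REOFFENSE = 0.75
LOW_RISK_REOFFENSE = 0.25

fpr_lower_base = false_positive_rate(0.40, HIGH_RISK_REOFFENSE, LOW_RISK_REOFFENSE)
fpr_higher_base = false_positive_rate(0.50, HIGH_RISK_REOFFENSE, LOW_RISK_REOFFENSE)

print(f"Base rate 40%: false positive rate {fpr_lower_base:.3f}")
print(f"Base rate 50%: false positive rate {fpr_higher_base:.3f}")
```

Under these assumptions, the group with the 40 percent base rate has a false positive rate of about 12.5 percent, while the group with the 50 percent base rate has one of about 25 percent, even though a high-risk label means the same thing for both groups.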

If racial disparities in the criminal justice system were to be corrected — a big task that is outside the scope of a single tool — better data could be put into the algorithms, said Chris Griffin, consultant to Harvard Law School’s Access to Justice Lab, which is doing research in Dane County on the PSA.

Early data from the PSA show more black and Latino defendants are released pretrial when a risk assessment tool is being used, according to Arnold Ventures. According to the Pretrial Justice Institute, both the PSA and the VPRAI are capable of producing race-neutral results.

Will pretrial risk assessment tools result in "throngs of violent criminals" being released back into the community?

Data show that the vast majority of defendants are low risk when they are released pretrial, meaning most show up for court and do not commit new crimes. Data have also shown that 98 percent or more of pretrial defendants do not commit new violent crimes when released.

Risk assessment tools recommend the release of low-risk defendants and the detention of high-risk, potentially violent defendants. Counties get to choose where to draw the line on how much risk they are willing to accept.

In any system, mistakes will be made because it is impossible to predict future human behavior with 100 percent accuracy. In a system without risk assessment tools, dangerous defendants can post bail and commit a new violent crime while released.

The only way to prevent all new violent criminal activity among defendants awaiting trial would be to detain everyone. That would be unconstitutional.

The nonprofit Wisconsin Center for Investigative Journalism collaborates with Wisconsin Public Radio, Wisconsin Public Television, other news media and the UW-Madison School of Journalism and Mass Communication. All works created, published, posted or disseminated by the Center do not necessarily reflect the views or opinions of UW-Madison or any of its affiliates.

This report is the copyright © of its original publisher. It is reproduced with permission by WisContext, a service of PBS Wisconsin and Wisconsin Public Radio.