What Effects Do Pretrial Risk Assessments Have On Racial Biases In Justice System?

As states around the country are starting to rethink their use of bail, many are turning to algorithms — but critics claim they can be racially biased.

Research shows that bail-setting practices are already far from equitable. A 2003 Bowling Green State University study found African-American and Latino defendants were more than twice as likely as white defendants to get stuck in jail because they could not post bail — a study that has been cited by other researchers more than 100 times.

Similarly, a 2007 study published in a peer-reviewed journal at the University of Central Missouri found that African-American and Latino defendants were less than half as likely to be able to afford the same bail amounts as white defendants.

But whether these computer algorithms will worsen or help correct these disparities is the subject of heated debate.

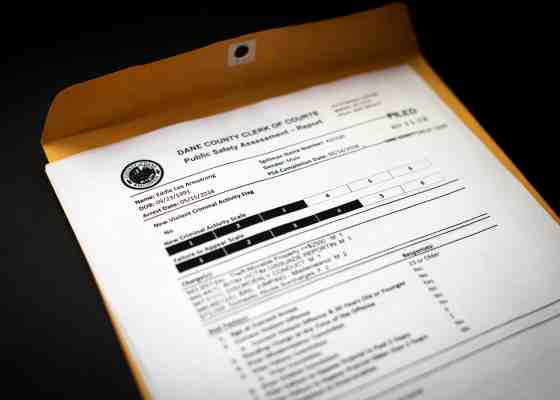

The tools, called pretrial risk assessments, use facts about defendants, including their criminal history or past attendance at court dates, to predict how likely they are to commit a new crime or skip out on court if released before trial.

Dane and Milwaukee counties use Public Safety Assessments, one such tool, to help make some or all bail decisions. Seven other counties — Chippewa, Eau Claire, La Crosse, Marathon, Outagamie, Rock and Waukesha — have agreed to pilot PSAs.

Most defendants are low risk, so the hope is that the tools will encourage judges to grant pretrial release to more low-income defendants, who can get stuck in jail on minor offenses only because they are poor and cannot afford bail.

But the tools have come under fire from nearly 120 criminal justice organizations that signed a July 2018 statement arguing that pretrial risk assessment tools can reinforce racial inequality in the criminal justice system.

Monique Dixon, deputy director of policy and senior counsel for the NAACP Legal Defense and Educational Fund, called the algorithms a "tool of oppression" for people of color.

The Pretrial Justice Institute, which advocates for "safe, fair and effective" pretrial justice practices, asserts the opposite: that such tools can "substantially reduce the disparate impact that people of color experience."

The Community Corrections Collaborative Network, a network composed of associations that represent more than 90,000 probation, parole, pretrial and treatment professionals around the country, also supports the use of the tools.

How can these organizations — which are all dedicated to fairness in criminal justice — come to such contrary conclusions about the tools?

No one actually knows for sure what effect such risk assessments will have on existing racial disparities, said Chris Griffin, former research director at Harvard Law School's Access to Justice Lab, which is conducting a study of risk assessment in Dane County.

"We just don't know whether the problems with the current system are better or worse than the problems that might be there with the tool in place," Griffin said.

Tools will not 'wreak havoc'

So why not avoid using the tools altogether?

Chris Griffin said if counties never implement the tools, researchers will never be able to test them to see if they help the system. That is what Access to Justice Lab researchers are doing in Dane County.

"I sympathize with that bind of, 'Should you put this out in the world before you know whether it works or not?'" Griffin said. "And my answer is yes, but if and only if you have enough evidence to suggest that it won't wreak havoc."

Sharad Goel, an assistant professor in management science and engineering at Stanford University, said computer scientists have not yet found evidence that the algorithms are racially biased. Some data suggest the tools are not discriminatory, he added.

In Kentucky, the PSA predicted equally well for both black and white defendants in terms of their risk of committing a new crime or new violent crime, according to a 2018 study by researchers from the Research Triangle Institute, an independent nonprofit "dedicated to improving the human condition."

When the PSA was implemented in Yakima County, Washington, the county saw a decrease in racial disparities. The release rate for white defendants stayed the same, while the release rate for Latinos, African-Americans, Asians and Native Americans increased.

Arnold Ventures, which developed the PSA, claims it is race and gender neutral. When calculating risk scores, the PSA does not consider a person’s ethnicity, income, education level, employment or neighborhood — factors that some fear could lead to discriminatory outcomes.

Arnold Ventures, formerly known as the Laura and John Arnold Foundation, plans additional testing in this area to get more definitive answers.

"It's absolutely top of mind for us, to be able to ensure that the bail reform efforts that include risk assessments end up with results that reduce any racial disparities," said Jeremy Travis of Arnold Ventures.

Cementing in disparities remains 'real risk'

Organizations opposing risk assessment have said such tools can be based on U.S. criminal justice data in which racial inequalities are embedded. When this biased data goes into the algorithm, it can turn into a feedback loop, cementing in racial disparities.

"Even when you're just including criminal records, due to the racial disparities that we have in the criminal legal system, there ends up being a lot of bias that is leaked into these algorithms," said Thea Sebastian, policy counsel for the Civil Rights Corps, one of the organizations that signed the statement.

Goel acknowledged that recreating biases is a "real risk," but said that does not mean the algorithms are biased.

The Community Corrections Collaborative Network said the results of risk assessment tools "reflect the reality that bias exists within the criminal justice system," and the tools themselves do not have the power to mitigate the discrimination entrenched in our current system.

But even a tool that uses data from a "tainted" system could end up letting more black and Latino defendants out pretrial, Griffin said.

At the very least, Goel said, risk assessment tools are probably less biased than judges, since human decision makers alone are "particularly bad."

"You have to look at what the status quo is. We have ... decision makers that are acting on their own biases," Goel said. "It's such a low bar right now in terms of improving decisions that I think these can have a positive effect."

The nonprofit Wisconsin Center for Investigative Journalism collaborates with Wisconsin Public Radio, Wisconsin Public Television, other news media and the UW-Madison School of Journalism and Mass Communication. All works created, published, posted or disseminated by the Center do not necessarily reflect the views or opinions of UW-Madison or any of its affiliates.

This report is the copyright © of its original publisher. It is reproduced with permission by WisContext, a service of PBS Wisconsin and Wisconsin Public Radio.